- What is Security Program Management

- Key Bugcrowd Terms

- Launching a Security Program

- Running a Successful Security Program

- Expectations for Managing a Security Program Over Time

- Tips for Managing a Successful Security Program

- Support Resources

This document addresses recurring questions and themes we receive from our customers getting started with Bugcrowd.

What is Security Program Management

The Bugcrowd Platform employs a flexible and efficient approach to Security Program Management. This framework allows our customers to quickly set up and manage program resources, construct and launch engagements, and manage submissions and workflows.

Security Program Management provides the following key benefits:

- Robust and enduring Security Programs mean less time spent manually configuring

  - The Security Program defines the scope, teams, SDLC integrations, reward pool, and credentials. Each Engagement in the Security Program can inherit these resources.

- Rapid Crowd utilization and results

  - The Engagement is the new means for engaging the crowd to achieve an outcome. Launch or duplicate Engagements rapidly and utilize the crowd to obtain results faster.

- Holistic reporting and insights

  - Generate comprehensive reports, visualize insights, and extract data for all Engagements or a selected set within your Security Program.

- All your submissions in one place

  - Submissions are created by an Engagement, but reside at the Security Program. No more data isolation or moving submissions from one program to another.

- Aligned to your security teams

  - Flexibly define and manage your Security Programs, including scope and team member access, to align with your organization’s structure and preferences.

For more information on Security Program Management, please see Security Program Overview.

Key Bugcrowd Terms

Crowdcontrol

Crowdcontrol is the official name of Bugcrowd’s platform. You will use the portal to interact with Crowdcontrol. Through this portal you will be able to set up and manage your Organization, Security Program(s), and Engagement(s).

Organization

The Organization is the topmost layer for initial customer data and ongoing resource management. Resources are allocated from the Organization to one or more Security Programs by means of delegation. For example, the Organization allocates funds to Security Programs (reward pools) which are used to pay researchers. Users are created at the Organization level and given roles specific to the Security Programs to which they’re assigned.

Security Program

The Security Program is the container of target scope, submissions, and settings enabled on one or more Engagements of the same or differing types (e.g. Vulnerability Disclosure, Bug Bounty, Pen Test).

Engagement

The Engagement is a defined set of work and testing requirements that is presented to the crowd and is where submissions are reported. The majority of the configuration occurs at the Security Program and is inherited by its Engagements.

Engagement Brief

The Engagement Brief provides you the ability to specify the purpose, scope, and details of an engagement to enable researchers to understand the requirements to participate and test. After the initial kickoff call with your Implementation Engineer (IE), we’ll work with your team to create a concise and effective engagement brief.

Vulnerability Rating Taxonomy (VRT)

Bugcrowd’s Vulnerability Rating Taxonomy (VRT) is the basis by which we rate the technical impact of a finding (vulnerability). Using the VRT, we assign findings relative priorities that range from critical (P1, highest reward) to informational (P5, no reward).

We recommend familiarizing yourself with this taxonomy before launching an Engagement. Your organization may have different preferences regarding the prioritization or rating of particular vulnerability types. Discuss these with your Implementation Engineer (IE) so that they are clearly communicated in your Engagement Brief, which helps researchers focus their testing efforts effectively.

Launching a Security Program

Your Bugcrowd Team

- Implementation Engineer (IE): The IE is the primary point of contact for the implementation phase of new Security Programs and Engagements. The IE will be the project lead through the launch of your Engagement. Primary duties include project kickoff, training, building the Security Program, Engagement, and Engagement Brief, and being the single point of contact during the implementation phase.

- Solutions Architect (SA): The SA is the technical resource who will review the engagement and brief. They will provide recommendations as needed and assist with any complex or technical aspects of the implementation. The SA will assist the IE during the setup period, and will re-engage for any future complex or technical questions.

- Technical Customer Success Manager (TCSM): The TCSM is part of the ongoing success team. After the Security Program and Engagement are live, they will be responsible for day-to-day communication and regular check-ins. They’ll work collaboratively with you and your team to grow and mature the Security Program over time.

- Application Security Engineer (ASE): This is the person who will be triaging all the inbound findings reported to your Engagement. Most Program Owners work very closely with their ASEs, and if you have any questions regarding submissions, they’ll typically be answered by the ASE directly on the submission itself via comments. Sometimes there will be multiple ASEs on a single Security Program.

- Account Manager (AM): The Account Manager is teamed with your assigned TCSM and forms the ongoing success team for the live Security Program. The AM is primarily responsible for renewal and all contract-related topics.

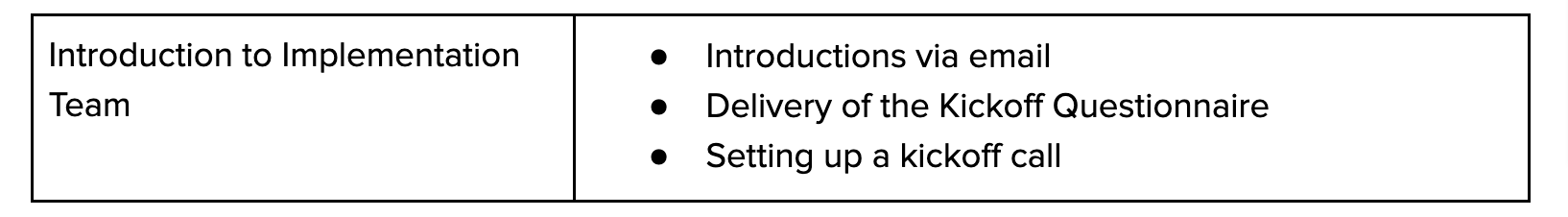

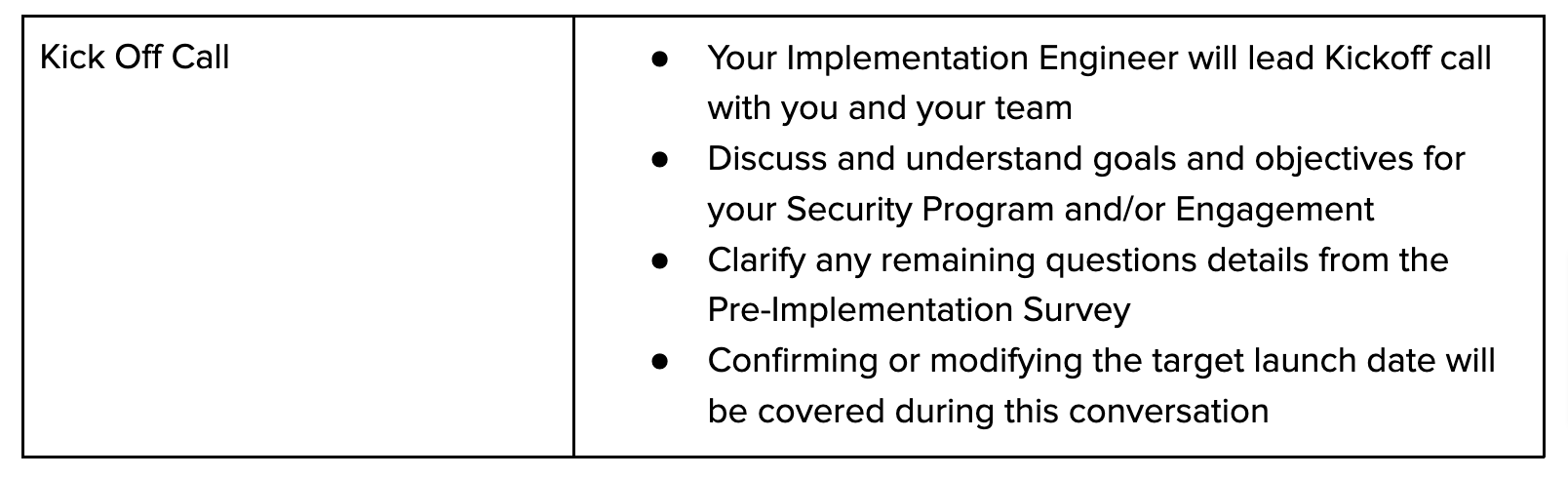

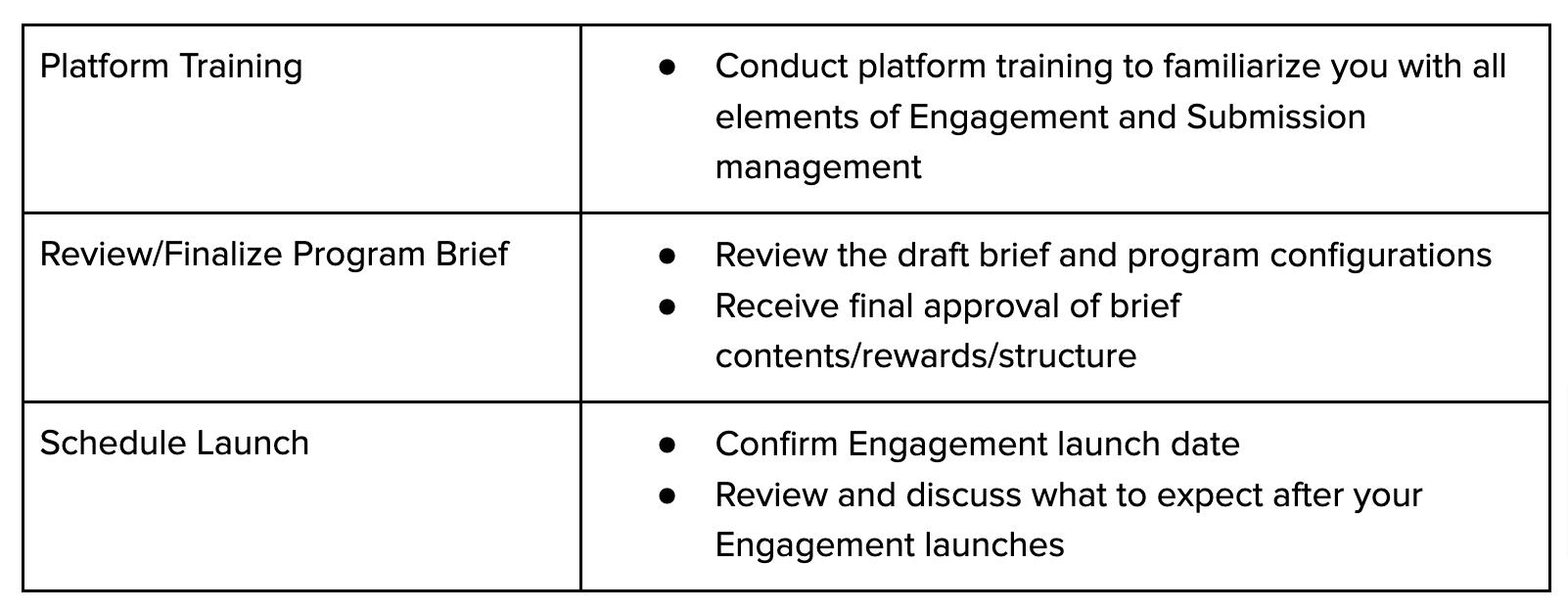

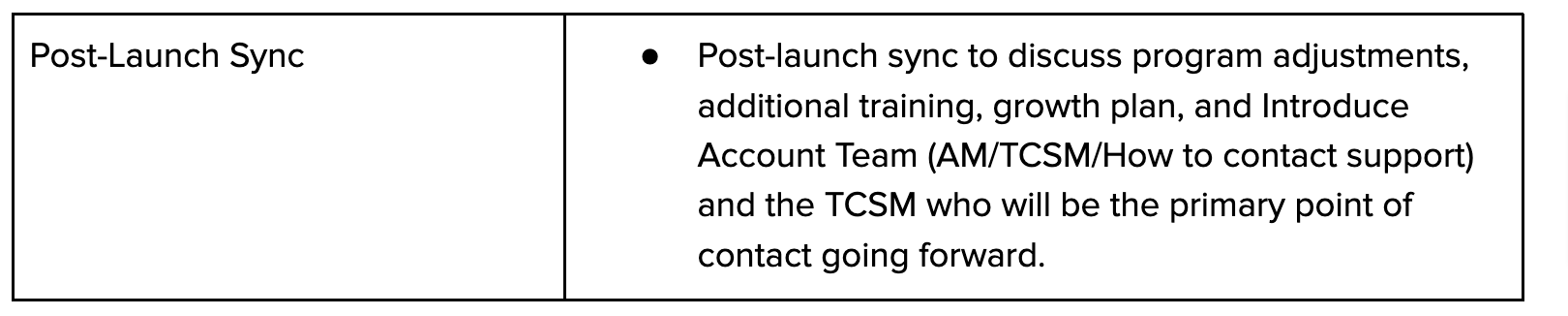

Timeline for Going Live (Typical Flow)

Week 0:

Week 1:

Week 2:

Week 3:

Week 4 - 5:

Shortly after purchasing Bugcrowd, you’ll be introduced to your Implementation Engineer by your Account Executive or another member of your account team.

Running a Successful Security Program

Running a Security Program requires consideration of various components and configurations, such as Engagement type, scope, and the timeliness of report acceptance and rewards.

Here is a breakdown of components for you to consider for your Security Program:

Managing Submissions

Once your Security Program is set up and your Engagement(s) are live, researchers will start testing and you will begin to receive submissions.

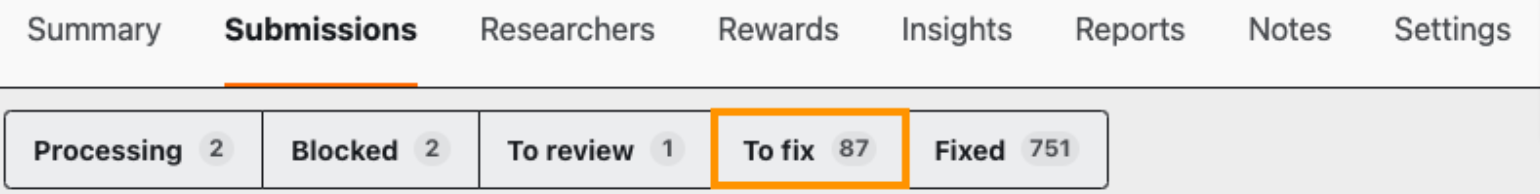

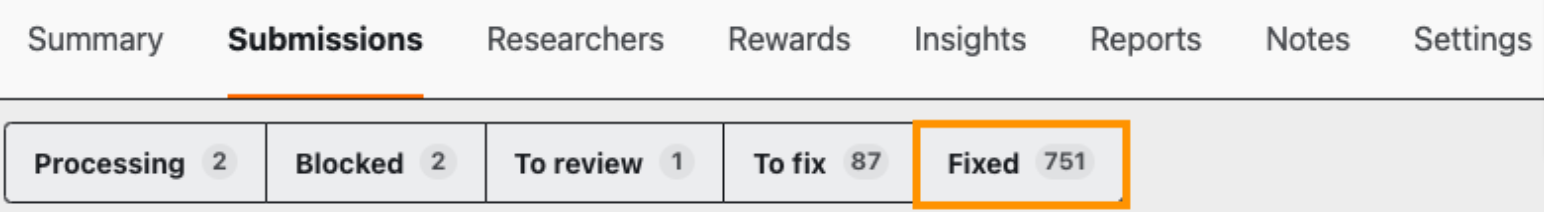

Depending on the size of your security team and your role, your responsibilities during the submission triage process may vary. Whether you manage one step of this process, or the entire thing, all submission management will take place on the Submission page in Crowdcontrol.

Here is a high-level overview of the general submission workflow:

-

Receive Vulnerability Notification: Once a vulnerability has been submitted to an engagement, a notification message will be sent to your email and to your Notification Inbox within Crowdcontrol depending on your notification settings.

-

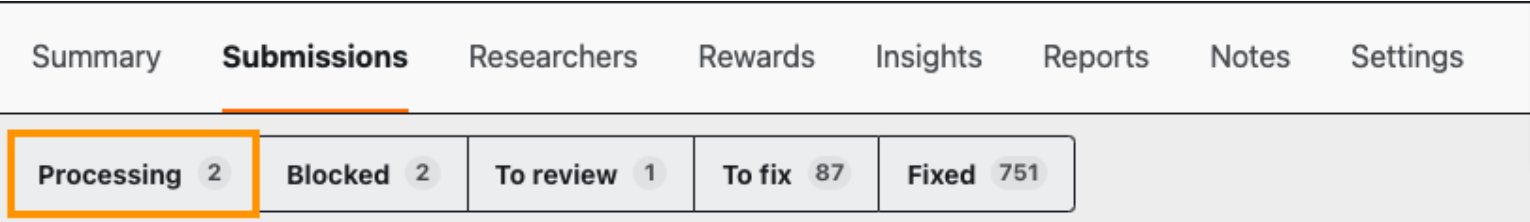

Researcher Submits: Researcher submits a Vulnerability Report (submission), and the submission will display in Crowdcontrol within the Submission page under Processing. The submission state will be New.

-

Submissions in New have not been triaged by an Application Security Engineer (ASE) yet or that the submission has a blocker on the researcher to provide more information.

-

Customers usually do not need to review submissions in the New state.

-

-

ASE Triage: A Bugcrowd Application Security Engineer (ASE) reviews the submission and verifies that it is valid, reproducible, in-scope, and not a duplicate.

-

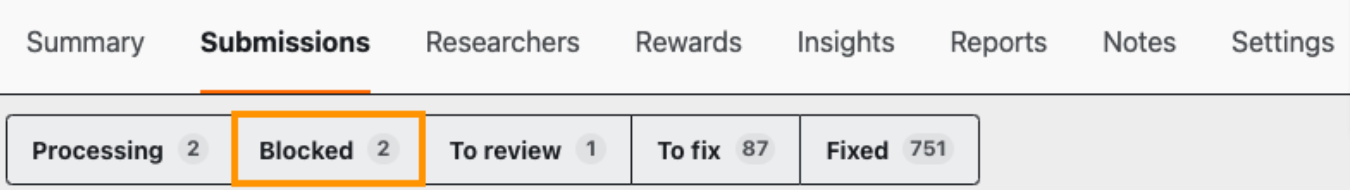

If the ASE needs additional information from you to continue triaging the submission, they will place a Blocker on the submission and provide details about what information may be required. This will display for you on the Submission page under Blocked.

-

-

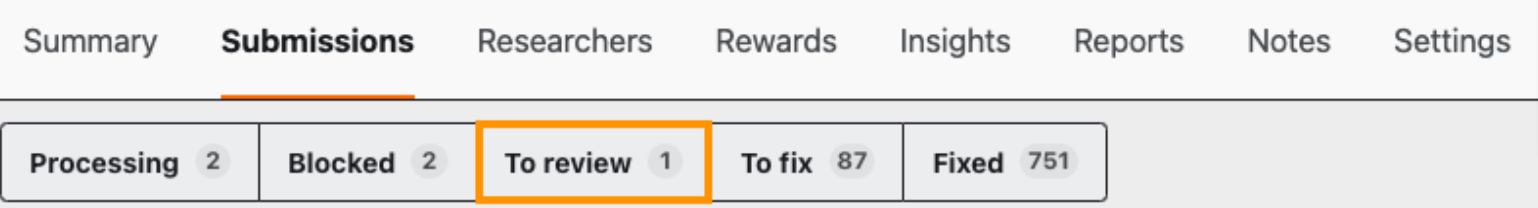

Submission ready for Review: If the submission meets the criteria for the submission to be further reviewed, the ASE assigns a priority to the submission based on the Vulnerability Rating Taxonomy (VRT) and moves the submission to the Triaged state. The submission will show as To review on the submission page.

- Customer Review: Submissions in the Triaged state under To review are items you and your team need to review and accept.

  - The recommended and expected timeline for accepting submissions is one week.

  - If you expect it to take longer than this for a submission to be accepted, please leave a status update in the form of a comment to the researcher and provide context for how long they can expect to wait.

  - If the report is missing any information, contact the researcher directly using the reply to the message box below the report. To get a second opinion, leave a note, or include a team member in this process, use the leave a team note message box below the report. For more information, see Commenting submission functionality.

- Customer Accepts: Once you agree that you intend to fix and reward the submission, you can accept the finding.

  - Confirm the submission’s priority level on the right-hand side. Move the submission to an Unresolved state once you have reproduced and validated the vulnerability. An Unresolved submission indicates that this vulnerability needs to be fixed.

  - To do this, use the drop-down arrow in the right-hand corner and select Unresolved, which is displayed under To fix on the Submission page.

  - If integrated, your ticketing system will send a ticket notifying your development team.

  - If there are any questions, or a submission isn’t valid or in-scope, we recommend not changing the state of the submission from Triaged. Instead, place a blocker on Bugcrowd Operations and leave a note explaining the issue.

- Customer Rewards: If you offer monetary rewards on your Engagement, a reward modal will display when you transition a submission to Unresolved and ask you to provide reward details.

  - Please reward the submission at a dollar amount that’s consistent with what’s listed on the Engagement Brief. A pop-up window will suggest an amount that aligns with the set priority of the finding. If the suggested amount does not align with your expectations, please let your TCSM know, and we can adjust accordingly.

  - We highly recommend leaving a comment showing your appreciation for the researcher’s time and effort (something as simple as ‘Thank You’ can go a long way).

  - Additionally, if you feel the researcher has gone above and beyond in providing value, we recommend leaving a tip to financially show your gratitude.

  - If the reward modal did not automatically pop up, you can add the reward with the Add reward button.

  Note: As soon as a submission is set to Unresolved, the researcher will get an email that notifies them the vulnerability has been accepted and is pending reward (if applicable).

- Customer Fixes: Once the vulnerability has been fixed, move the submission to Resolved, which is displayed under Fixed on the Submission page. This will allow Bugcrowd to help catch any potential regressions that may occur after the fix has been implemented. This usually happens days to weeks after the submission has been accepted.

  - Note: Do not move a submission from Unresolved into Resolved until it is truly fixed. Marking a submission Resolved when it is not can result in a researcher resubmitting a report for that vulnerability. We cannot count this as a duplicate since the original submission was moved to Resolved (fixed), so the new report warrants another reward payout.

  - Once a submission has been moved to Resolved, this marks the end of the submission’s lifecycle (unless a customer wants retesting).

For more information on the Submission page, please click here.
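The submission workflow above can be read as a small state machine. The following is a minimal sketch for illustration only, assuming just the four states named in this document (New, Triaged, Unresolved, Resolved) and the forward transitions described in the steps. The names `State`, `PAGE_SECTION`, `ALLOWED`, and `advance` are hypothetical and are not part of Crowdcontrol or any Bugcrowd API. Blockers and any other states the platform may support are left out.

```python
from enum import Enum


class State(Enum):
    NEW = "New"
    TRIAGED = "Triaged"
    UNRESOLVED = "Unresolved"
    RESOLVED = "Resolved"


# Where each state is displayed on the Submission page, per the steps above.
PAGE_SECTION = {
    State.NEW: "Processing",
    State.TRIAGED: "To review",
    State.UNRESOLVED: "To fix",
    State.RESOLVED: "Fixed",
}

# Forward transitions described in this document (an assumption of this
# sketch: the real platform may allow other states and moves).
ALLOWED = {
    State.NEW: {State.TRIAGED},          # ASE triage
    State.TRIAGED: {State.UNRESOLVED},   # customer accepts
    State.UNRESOLVED: {State.RESOLVED},  # customer fixes
    State.RESOLVED: set(),               # end of the lifecycle
}


def advance(current: State, target: State) -> State:
    """Return target if the move follows the workflow, else raise ValueError."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move {current.value} -> {target.value}")
    return target
```

For example, `advance(State.TRIAGED, State.UNRESOLVED)` models accepting a finding, while `advance(State.NEW, State.RESOLVED)` raises `ValueError` because it skips triage and acceptance.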

Bugcrowd & Customer Triage SLOs

We recommend you familiarize yourself with Bugcrowd’s customer service triage SLOs. For Security Programs with Vulnerability Triage packages including non-business days, triage will be included as specified.

We ask customers to maintain the following SLOs:

- Accept triaged submissions within five days of being moved to Triaged by the Bugcrowd team.

- If you expect it to take longer than one week for a submission to be accepted, please leave a status update in the form of a comment to the researcher (this enables them to plan their time accordingly).

- Please be aware that lengthy delays in accepting submissions are heavily correlated with diminished researcher participation and lower total submission volume over time.

Good Practice Tip: We recommend having at least two Program Owners on a Security Program so that there is continued coverage if one is not available. Having two people helps ensure tasks get handled more quickly, further building trust and partnership with the researcher community.
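As a rough illustration of the acceptance SLO, the sketch below computes when a triaged submission is due for acceptance and whether it is overdue. It assumes the five days are calendar days, which this document does not specify. The names `ACCEPT_WITHIN`, `acceptance_due`, and `is_overdue` are hypothetical helpers, not platform features.

```python
from datetime import datetime, timedelta

# Assumption: "five days" is counted in calendar days from the move to Triaged.
ACCEPT_WITHIN = timedelta(days=5)


def acceptance_due(triaged_at: datetime) -> datetime:
    """Latest time by which the submission should be accepted."""
    return triaged_at + ACCEPT_WITHIN


def is_overdue(triaged_at: datetime, now: datetime) -> bool:
    """True once the acceptance window has passed."""
    return now > acceptance_due(triaged_at)
```

A team could run a check like this against its open Triaged submissions to decide which ones need either acceptance or a status comment to the researcher.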

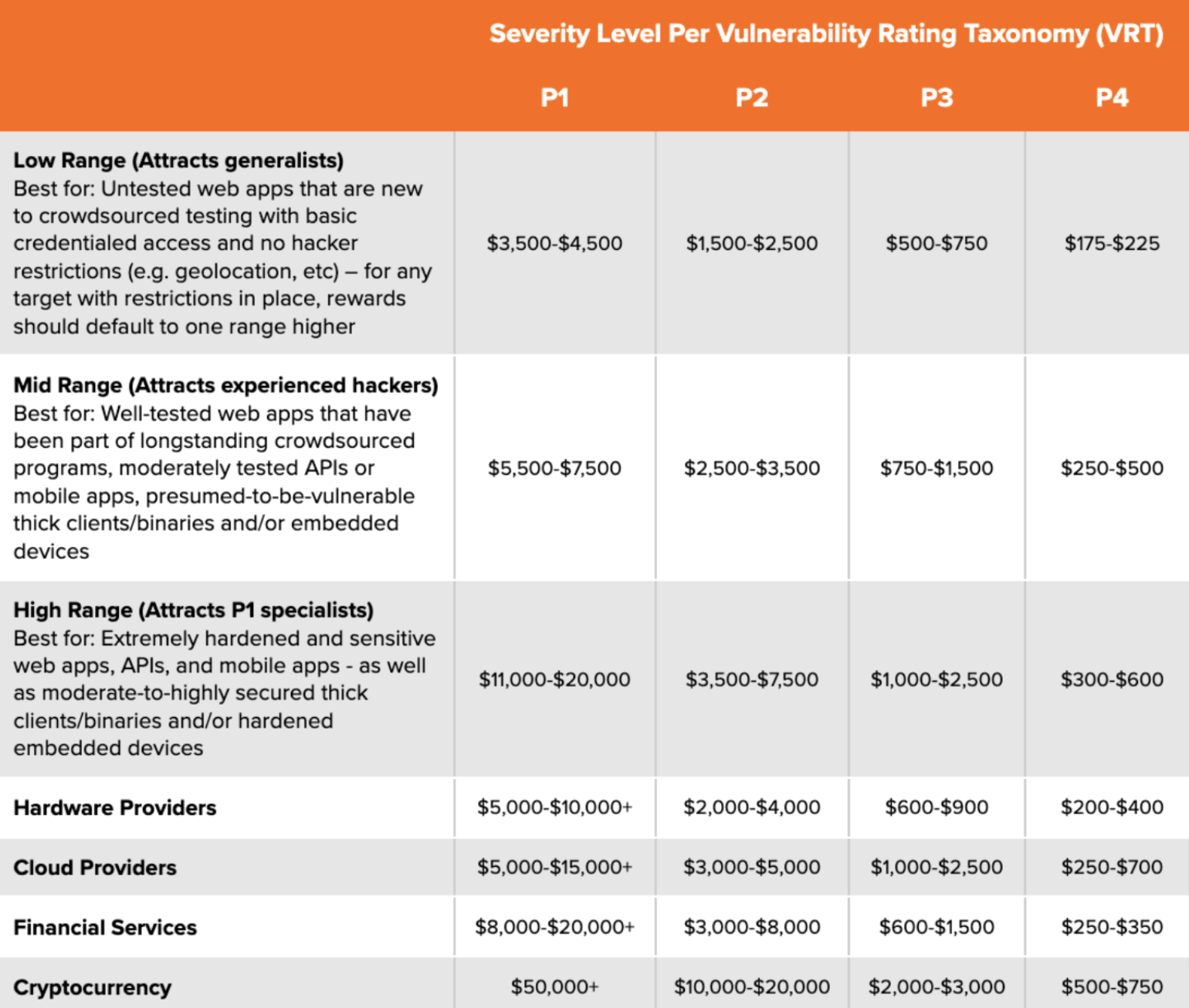

Reward Ranges Guidance

Many first-time Program Owners think that paying $20,000 for a P1 is expensive and consider whether they can pay less for submissions. Before you make a decision about reward ranges, we advise you to do the following exercise:

- Imagine you get breached tomorrow by a P1 level vulnerability (SQLi, RCE, etc), leaking a substantial amount of client and/or internal information on the web.

- Considering you’ve been breached and it’s all over the news, does $20,000 still seem like too much to pay to know about the critical issue before it happens?

- If knowing about the issue in advance isn’t worth at least $20,000, then by all means, pay less. However, in most cases, individuals would gladly pay 20 times that amount for the luxury of knowing about the issue before it got exploited. With this in mind, we recommend paying more than $20,000 for a P1 depending on the vulnerability type and industry standards.

- Researchers can decide which engagements they test on, and will look for engagements that fairly compensate them for their time and effort.

While non-exhaustive, the following list details our recommended starting reward ranges and corresponding example target types:

Know that you only ever have to pay the max reward for an actual P1 finding. There are no false positives: you only pay when you’ve validated and accepted the issue. If there are no P1 issues, you never have to pay that amount. Please reach out to your TCSM if you need help determining the reward range that is right for your engagement goals.

Running out of Reward Pool

If you run low on or out of funding for your Security Program, contact your Account Manager (AM) to request a purchase order for an additional amount that you specify.

Your team may also reach out proactively if your reward pool dips below a certain threshold so that the pool can be replenished. We recommend having enough in your reward pool to cover at least three P1s at any given time while the Engagement is live.
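The three-P1 recommendation is simple arithmetic, sketched below. The function names `required_reserve` and `pool_shortfall` are hypothetical, and the maximum P1 reward is whatever your Engagement Brief lists. The $20,000 figure in the usage note is only the example amount discussed earlier in this document.

```python
def required_reserve(max_p1_reward: int, p1_count: int = 3) -> int:
    """Reward pool balance needed to cover p1_count maximum P1 payouts."""
    return max_p1_reward * p1_count


def pool_shortfall(pool_balance: int, max_p1_reward: int, p1_count: int = 3) -> int:
    """Amount to add to reach the recommended reserve (0 if already covered)."""
    return max(0, required_reserve(max_p1_reward, p1_count) - pool_balance)
```

With a maximum P1 reward of $20,000, the recommended reserve is $60,000, so a pool holding $45,000 would be $15,000 short.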

Using Bugcrowd’s Vulnerability Rating Taxonomy (VRT)

Bugcrowd’s VRT is something we’ve collectively built and refined over the course of hundreds of programs. It is a classification system for ranking known vulnerability types:

- P1 (critical)

- P2 (high)

- P3 (medium)

- P4 (low)

- P5 (informational)

These priority levels correspond to set reward ranges on the Engagement Brief, which determine the range in which a given submission gets rewarded. We recommend you familiarize yourself with the VRT.
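To make the priority-to-reward relationship concrete, here is a minimal sketch of a lookup from a VRT priority to the reward range on an Engagement Brief. The function `reward_range` and the `brief_ranges` mapping are hypothetical. The dollar figures in the usage note are placeholders chosen for illustration, not recommended ranges. The only rule taken from this document is that P5 (informational) carries no reward.

```python
from typing import Dict, Optional, Tuple

RewardRange = Tuple[int, int]  # (minimum, maximum) in dollars

PRIORITIES = ("P1", "P2", "P3", "P4", "P5")


def reward_range(priority: str, brief_ranges: Dict[str, RewardRange]) -> Optional[RewardRange]:
    """Look up the reward range for a priority; P5 returns None (no reward)."""
    if priority not in PRIORITIES:
        raise ValueError(f"unknown priority: {priority}")
    if priority == "P5":
        return None
    return brief_ranges[priority]
```

For example, with placeholder ranges `{"P1": (10_000, 20_000), "P2": (3_000, 6_000), "P3": (1_000, 2_000), "P4": (200, 500)}`, a P2 finding falls in the `(3_000, 6_000)` band and a P5 finding returns `None`.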

The VRT is open source. It is open to community (customer, staff, and researcher) suggestions and contributions, which happen regularly. Based on internal conversations, we then update and adjust the VRT as needed. New VRT versions are regularly released on a quarterly or half-year basis. Major changes or necessary modifications might result in a prioritized version release. You can review the VRT on GitHub.

The VRT is an industry standard that we encourage all Program Owners to abide by. Leveraging the VRT enables Program Owners to implicitly signify discrete reward ranges for known vulnerabilities, thus removing any ambiguity for participating researchers. This saves you from having to enumerate every possible vulnerability and the associated reward amounts, and it prevents confusion among researchers about how things should be rated or what should be submitted.

Deviations from the VRT are perfectly acceptable. However, if you deviate from or have defined exclusions to the VRT, we recommend you enumerate the changes in your Engagement Brief so this is clearly communicated to researchers participating in your engagement.

Importing Known Issues

Program Owners have often done some degree of security testing prior to running a Security Program (e.g. via pentests, internal testing, automated scanning, etc). If not all of those findings have been remediated by the time your Security Program starts, you likely don’t want to pay out for issues that you already know about. To avoid this, you can import known issues.

Uploading your known issues to the platform before the program starts allows our ASE team to dedupe against those findings. It also gives researchers a level of trust and visibility that issues marked as duplicates truly existed prior to their report.

When importing known issues, there are a few options:

- Highly Recommended: Import the known issues into the platform via CSV or API. For details, see Import Known Issues.

  - Callout: When importing known issues, it’s important that you include enough information for our ASEs to fully replicate the findings and de-duplicate based on the provided information.

  - Example: If importing an XSS finding, instead of saying “XSS on example.com/example/”, the description should state the vulnerable parameter, the injection used, any steps to reach the vulnerable functionality, and so on.

- List out the known issues on the Engagement Brief. This is an extremely helpful and well-regarded show of good faith for researchers. Explicitly listing the known issues on the Engagement Brief allows researchers to focus their efforts on finding and reporting things that you don’t already know about.

- Choose not to import any known issues.

  - Please note that if you choose this option, both researchers and Bugcrowd will expect all valid, in-scope findings to be rewarded, even if they’re already known on your end.

Provisioning credentials

Below are best practices and recommendations for provisioning credentials for researcher testing:

- Testing on apps without any authenticated access (e.g. marketing sites/etc) will often result in a low number of submissions and activity, since there’s little to no dynamic functionality for researchers to test against. When selecting targets, authenticated targets will almost always be more attractive and meaningful assets to test as part of an Engagement.

- If there is an authenticated side of the in-scope application, we should make every effort to provide credentials to researchers. Similar to the above, testing something without credentials, while it may seem “black-box”, is an ineffective way of truly understanding the relative security of an app, as well as identifying vulnerabilities.

- If we are able to have credentials, we typically ask for two accounts per researcher so that researchers can perform horizontal testing. Without two sets of credentials, we potentially leave an entire class of issues off the table (IDORs, most notably). Having two sets will often lead to findings, and is highly recommended (even at the cost of fewer total testers). One notable exception is if we’re able to provision each researcher with a single high-privileged user with which they can then self-provision additional users as needed, in which case one set is ok.

- In line with the above, a general guiding rule around credentialed access for researchers is that the more attack surface we can put in front of researchers, the more likely we are to find issues. With this in mind, we always want to provide researchers with as high a level of access as we’re able to. Instead of giving all researchers low-priv users, we should give them both low-priv and high-priv users, allowing them to attempt privilege escalation attacks and enabling them to test the most functionality possible.

- Sharing credentials is never a recommended or ideal practice, and should be avoided whenever possible. All it takes is one researcher accidentally changing the password, and then everyone is locked out. Researchers lose their momentum and interest, and are unlikely to return to full steam even when the issue is resolved.

- If this is a multi-tenanted application, we ideally want to give researchers access to two tenants (or organizations), so they can do cross-tenant testing. In an ideal world, each researcher would have their own tenant. The goal is that researchers can perform horizontal testing inside their own org and also do cross-org testing.

- Understand how many total sets of credentials can be created and handed out to researchers over the course of the program. This number will inform the maximum number of researchers and often the starting crowd size as well. As noted above, we ideally want to provide two sets per researcher, so this should be considered when thinking about how much runway we have in terms of credentials.

- Requiring researchers to provide personal information to set up accounts (credit card numbers, social security numbers, etc) should be avoided. This can often lead to researchers not being willing to participate in your engagement.

  - We recommend instead using staging, since accounts can often be pre-populated independent of actual user info. Finding a way around any barriers to entry is important in being able to get researchers involved and activated.
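The credential runway point above reduces to integer division. The sketch below, using a hypothetical helper `max_researchers`, estimates how many researchers a given number of credential sets can support, assuming the recommended two sets per researcher. Pass `sets_per_researcher=1` for the exception where each researcher receives a single high-privileged account and can self-provision additional users.

```python
def max_researchers(total_credential_sets: int, sets_per_researcher: int = 2) -> int:
    """Number of researchers that can each receive a full allocation."""
    if total_credential_sets < 0:
        raise ValueError("total_credential_sets must not be negative")
    if sets_per_researcher < 1:
        raise ValueError("sets_per_researcher must be at least 1")
    # Leftover sets that cannot form a full allocation are not counted.
    return total_credential_sets // sets_per_researcher
```

For instance, 51 sets support 25 researchers at two sets each, with one set left over.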

Managing Testing Environment

Where possible, we recommend using pre-production and/or staging environments (as opposed to production). However, in situations where production makes the most sense, we’re always in favor of whatever provides researchers with the best chance of success.

With this in mind, there are a number of benefits to not testing against production, including:

- There’s usually no customer PII present on non-prod environments (in the case that someone finds a vulnerability that exposes other users’ data).

- There’s no chance of affecting actual users if the staging environment is made unstable from researcher testing.

- If there’s anything to be purchased on the application, it’s usually a lot easier to provision fake credit cards/SSNs/etc on non-prod environments.

- Testing against a staging/non-prod app can allow us to test against a newer version of the app before it hits production, thereby identifying issues before they’re exposed to the public.

- It’s typically easier to mass create credentials for researchers to test with.

- It’s similarly much easier to restrict access to only testing researchers (only allowing access from a specific IP address, etc), which provides better visibility into researcher testing and coverage.

Note: If you are hosted by a third party such as AWS, Azure, or Google Cloud, it is your responsibility to file any relevant or required pentesting requests through the vendor you use. Here are some common vendors and their policies:

- Google Cloud: As of December 2018, Google does NOT require any formal pentesting request to be filed.

- Azure: Review the guidelines. As of December 2018, Microsoft does NOT require any formal pentesting request to be filed. However, make sure to follow the pentest rules of engagement.

- AWS: Review the guidelines.

We recommend you always double check with your vendor, as policies can and do often change.

Expectations for Managing a Security Program Over Time

Your Security Program will need to grow over time. “Set-and-forget” is not a phrase that applies to any successful program. Running a program requires continual investment and involvement. We’re well versed in adjusting as needed to promote optimum results, so we only ask that you be an active participant in this process. Our Solutions Architects will provide a full review of your program and brief every 6 months to provide recommendations for any program changes to drive success.

With that said, here are some additional tips for managing your Security Program over time:

Relationships Are Everything

Few things are more impactful to your Security Program than the relationships you have with the people who make it a success:

- Researchers

- Application Security Engineers (ASEs)

- Your success team - Technical Customer Success Manager (TCSM) and Account Manager (AM)

Like your own team of engineers, Researchers want to be assured that their efforts have an impact. Those who understand their role in the software development lifecycle (SDLC) are far more likely to continue focusing their efforts on your Security Program, doing deeper testing and coming back for more over time.

The relationships you build here will pay dividends well into the future. Furthermore, collaboratively working with your Bugcrowd team (ASE/AM/etc) will help us help you have the best possible Security Program.

Managing Researcher Activity

Most Security Programs and Engagements experience the highest level of researcher activity during the first few weeks. As such, this period is a critical time to demonstrate to researchers your level of involvement. Many researchers will report one bug, gauge the experience with the Program Owner, and then decide whether or not they will continue with the program.

As the Security Program matures and the low hanging fruit is picked clean, we look to three primary levers to ensure continued value and activity:

- Reward

- Scope

- Crowd size

Our team is experienced in identifying which to push, and when, in order to promote success as your program matures.

Additionally, sometime after demonstrating success with a Private engagement, your Account Manager may recommend taking the program Public in order to continue to recruit new and varied Researcher involvement. This will involve opening your engagement to the broader Bugcrowd Researcher community, which can lead to fresh testing and submissions because you are accessing more of the Crowd and their skills.

Transitioning Your Security Program’s Purpose

Over an extended period of time, as vulnerabilities are surfaced and remediated, we expect a reduction in the number of submissions. Hopefully your team will have also experienced positive changes in workflow, remediation practices, your relationship with the Development team, and perhaps their best practices as well. All of these factors contribute to reducing risk and thus overall program submissions. At this point it’s important to reframe the role your Security Program will play in your broader success plan. Where previously the Security Program may have served as a primary identifier of issues, over time it may shift down the line of defense and find its greatest value as a place for persons to responsibly disclose issues as they sporadically arise.

This can also open up other security testing opportunities and create the need to start a new Security Program or use other Bugcrowd products.

Tips for Managing a Successful Security Program

We first recommend reading the blog post 5 Tips and Tricks for Running a Successful Bug Bounty Engagement, which provides a good starting framework. Additionally, here are some other tips and practices we recommend for running a successful Security Program:

FRUIT

We have a simple acronym to help highlight some core beneficial characteristics of effective Program Owners: FRUIT. FRUIT stands for the following:

- Fair: Executing on the expectations set on the Engagement Brief and rewarding researchers for their effort. Remember that your brief is essentially a contract between your organization and the researchers, and it is ultimately your responsibility to ensure that the content accurately reflects the information you want to be conveyed to researchers.

- Responsive: Accepting and rewarding findings in a timely fashion and quickly responding to any questions from Bugcrowd or the researchers. Lengthy rewarding and response times jeopardize researcher goodwill and interest in participating. Two tips for achieving excellent response times:

  - Have two Program Owners to help ensure continuity and faster response times.

  - Reward submissions within five days of acceptance.

- Understanding: Recognizing that researchers are here to help. Treat them with the same respect that you would if they were an extension of your own team (because they are)!

- Invested: Doing what it takes to make a Security Program successful, whether that means getting more credentials, increasing rewards, or increasing the scope. Our most successful Security Programs are led by deeply invested Program Owners. An additional corollary to this point is to recognize that we want researchers to find vulnerabilities! Creating an environment that is conducive to this goal means being open to working with your TCSM on broadening access to more parts of your target for testing purposes.

- Transparent: Being clear and honest with researchers. If something is downgraded or not an issue, offering a full and clear explanation helps the Researcher to appropriately refocus their efforts. For engagements with reward ranges, it’s invaluable to provide extensive detail about submission types that map to each reward band.

Developing Successful Partnerships with Researchers

Researchers have a choice about how and where to spend their time and effort.

When building and managing your Security Program and Engagements, ask yourself whether you would enjoy and want to work under the expectations and requirements you have set for the researchers. Here are some key questions to help assess whether your Security Program is set up to encourage researchers to spend their time and effort testing on your Engagement:

- Does my Engagement Brief clearly outline my expectations and requirements for testing my targets and environment?

- Are the rewards I am offering competitive for the target types I want tested?

  - If my Engagement has additional requirements (geo restrictions, background checks, compliance requirements, etc.), are my reward ranges appropriate to incentivize the researchers to put in the additional effort needed to test?

- Do the reward ranges incentivize Researchers to select my Engagement to test on and to keep coming back to continue testing?

- Have I provided the materials and resources the Researchers need to understand my environment and test effectively?

Strong partnerships with Researchers are one of the key factors that contribute to the success and health of a customer’s Security Program.

Your role in a Managed Service

Bugcrowd’s managed services are designed to reduce the amount of resources and time you have to expend on your Security Program. That said, it’s still important to understand the role you play in ensuring continual program growth and success.

Our team is skilled in understanding the levers of Security Program health, and will periodically advise when further actions or decisions are needed on your part. The more proactive you are during this process, the more likely you are to see better results from the Security Program as a whole.

Data-based decisions

Bugcrowd has run over 5,000 managed programs to date, which has helped us amass a hefty repository of program success metrics. Please understand that all of the advice outlined in this document is based on our deep understanding of how to effectively manage outstanding programs for our customers.

Support Resources

For any other questions or issues, visit the Bugcrowd Customer Support Center or submit a support ticket through the Bugcrowd Support Portal.